QuantumTracer UGV

Project Overview

The QuantumTracer implements autonomous RC car navigation using vision-based imitation learning with the ACT (Action Chunking Transformer) policy, built on top of the LeRobot framework. The system learns to drive by imitating human demonstrations and, at run time, drives through a distributed inference architecture.

This project demonstrates the practical application of imitation learning for real-world autonomous navigation, featuring a complete pipeline from data collection to deployment with safety mechanisms and real-time inference capabilities.

System Architecture

Distributed inference: Raspberry Pi captures data, laptop runs AI inference

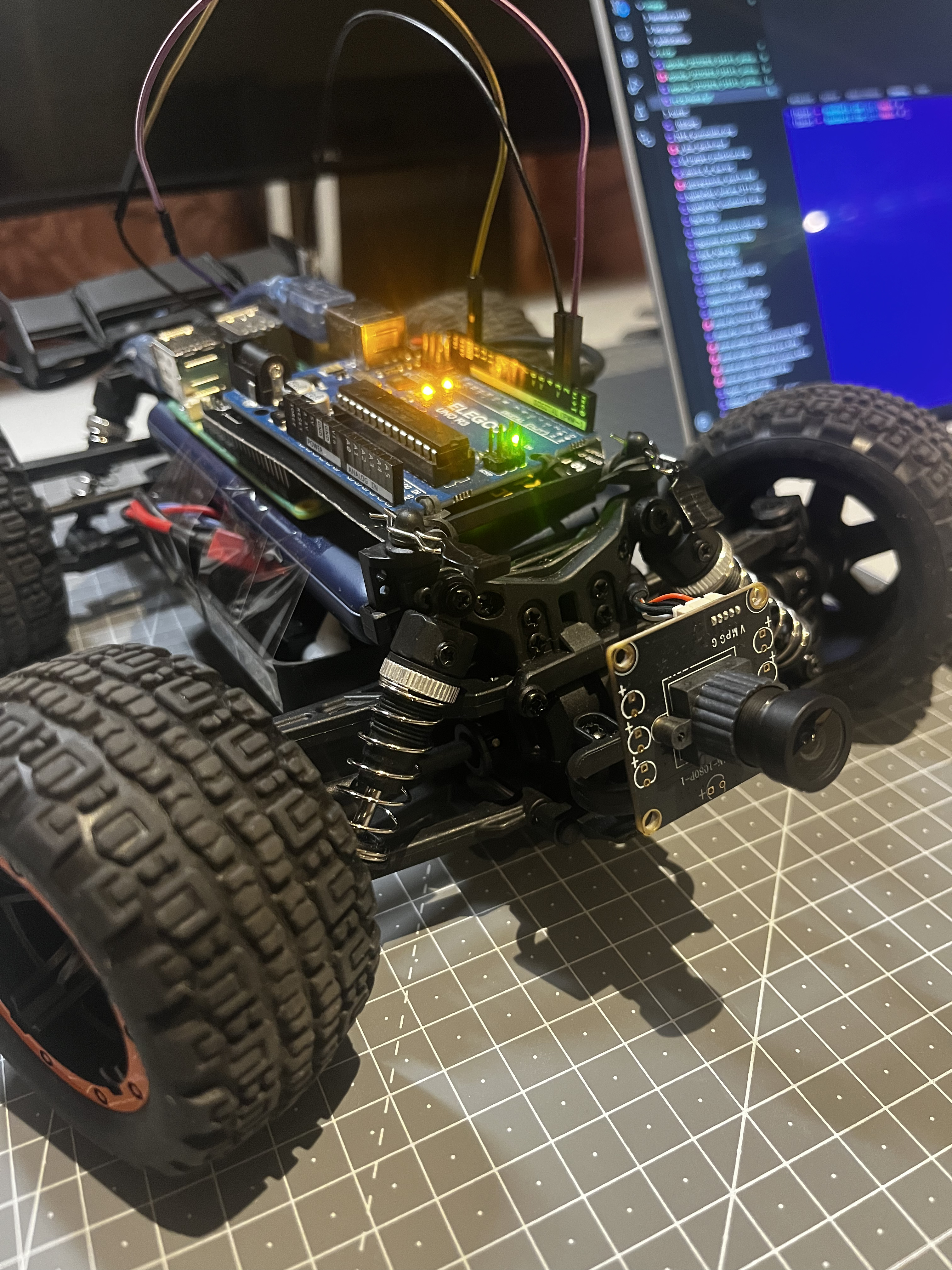

Complete QuantumTracer system with Raspberry Pi and camera mount

Data Recording for Imitation Learning

Sample driving episode recording (video, PWM throttle and steering) during teleoperation

Technical Implementation

Distributed Architecture

- Raspberry Pi 5: Camera capture, motor control, and real-time communication

- Laptop/GPU: High-performance AI model inference with CUDA acceleration

- WiFi Communication: Real-time link carrying camera frames to the laptop and predicted actions back to the Pi

- Emergency Systems: Hardware and software safety mechanisms
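The source does not specify the message format used over the WiFi link. The sketch below is a hypothetical one, assuming TCP: because TCP is a byte stream with no message boundaries, each camera frame is prefixed with its length, and the laptop replies with a fixed-size pair of float32 values (steering, throttle). All function names and the byte layout are illustrative, not the project's actual protocol.

```python
import socket
import struct
from typing import Tuple

# Hypothetical wire format (not taken from the project):
#   Pi -> laptop: 4-byte big-endian length, then the encoded frame bytes
#   laptop -> Pi: two big-endian float32 values (steering, throttle)
_LEN = struct.Struct(">I")
_ACTION = struct.Struct(">ff")


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, raising ConnectionError if the peer closes early."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        buf.extend(chunk)
    return bytes(buf)


def send_frame(sock: socket.socket, frame: bytes) -> None:
    # Length prefix lets the receiver find the end of each frame in the stream.
    sock.sendall(_LEN.pack(len(frame)) + frame)


def recv_frame(sock: socket.socket) -> bytes:
    (length,) = _LEN.unpack(recv_exact(sock, _LEN.size))
    return recv_exact(sock, length)


def send_action(sock: socket.socket, steering: float, throttle: float) -> None:
    sock.sendall(_ACTION.pack(steering, throttle))


def recv_action(sock: socket.socket) -> Tuple[float, float]:
    return _ACTION.unpack(recv_exact(sock, _ACTION.size))
```

For a local test, `socket.socketpair()` gives two connected sockets that can stand in for the Pi and the laptop.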

Hardware Integration

- FTX Tracer Truggy: Modified RC car with signal tapping for control

- PWM Signal Control: Direct GPIO control of the steering servo and the throttle ESC

- Front Camera: 640x480@30fps vision input for navigation

- Logic Level Conversion: Safe 5V to 3.3V signal conversion

- pigpio Daemon: Microsecond-accurate PWM timing control
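The policy outputs normalized commands, while the servo and ESC expect pulse widths in microseconds. A minimal mapping sketch follows, assuming the common hobby-RC convention of 1000-2000 µs with 1500 µs as center/neutral; the endpoints below are placeholders, not values measured on this car, and the steering sign convention depends on the linkage.

```python
# Assumed pulse-width endpoints (typical hobby-RC values, NOT measured on this car).
STEER_MIN_US, STEER_CENTER_US, STEER_MAX_US = 1000, 1500, 2000
THROTTLE_NEUTRAL_US, THROTTLE_MAX_US = 1500, 2000


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def steering_to_us(steering: float) -> int:
    """Map steering in [-1.0, 1.0] to a pulse width, allowing asymmetric endpoints."""
    s = clamp(steering, -1.0, 1.0)
    if s >= 0:
        return round(STEER_CENTER_US + s * (STEER_MAX_US - STEER_CENTER_US))
    return round(STEER_CENTER_US + s * (STEER_CENTER_US - STEER_MIN_US))


def throttle_to_us(throttle: float) -> int:
    """Map forward throttle in [0.0, 1.0] to a pulse width above neutral."""
    t = clamp(throttle, 0.0, 1.0)
    return round(THROTTLE_NEUTRAL_US + t * (THROTTLE_MAX_US - THROTTLE_NEUTRAL_US))
```

The resulting integers are the kind of value pigpio's Python interface accepts in `pi.set_servo_pulsewidth(gpio, pulsewidth)` (valid range 500-2500 µs, 0 turns the pulses off); clamping before conversion acts as a software limit in addition to any hardware PWM limits.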

Machine Learning Pipeline

- ACT Policy: Action Chunking Transformer for continuous control

- LeRobot Framework: Dataset handling, policy implementations, and training infrastructure

- Imitation Learning: Learning from human driving demonstrations

- Vision Processing: Real-time image preprocessing and inference

- TensorBoard Monitoring: Comprehensive training visualization
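ACT predicts a chunk of several future actions per inference, so consecutive chunks overlap in time. The ACT authors describe temporal ensembling, which averages all predictions for the current step with weights w_i = exp(-m · i), where w_0 belongs to the oldest prediction. The source does not say whether QuantumTracer uses ensembling; the class below is a hypothetical, framework-free sketch of the technique, and m = 0.01 is only a placeholder.

```python
import math
from collections import deque
from typing import Deque, List, Sequence, Tuple

Action = Tuple[float, ...]  # e.g. (steering, throttle)


class TemporalEnsembler:
    """Blend overlapping action chunks with weights w_i = exp(-m * i).

    i = 0 is the oldest prediction for the current step, so a larger m keeps
    more weight on older predictions and a smaller m reacts faster to new ones.
    """

    def __init__(self, m: float = 0.01):
        self.m = m
        self.chunks: Deque[Tuple[int, List[Action]]] = deque()

    def add_chunk(self, start_step: int, chunk: Sequence[Action]) -> None:
        # Chunks must be added in increasing start_step order.
        self.chunks.append((start_step, list(chunk)))
        # Forget chunks that no longer cover the current or any future step.
        while self.chunks and self.chunks[0][0] + len(self.chunks[0][1]) <= start_step:
            self.chunks.popleft()

    def action_at(self, step: int) -> Action:
        # All predictions made for `step`, oldest chunk first.
        preds = [chunk[step - s] for s, chunk in self.chunks if 0 <= step - s < len(chunk)]
        if not preds:
            raise ValueError(f"no chunk covers step {step}")
        weights = [math.exp(-self.m * i) for i in range(len(preds))]
        total = sum(weights)
        return tuple(
            sum(w * p[d] for w, p in zip(weights, preds)) / total
            for d in range(len(preds[0]))
        )
```

With m = 0 every overlapping prediction counts equally; as m grows the output approaches the oldest prediction, which smooths control at the cost of responsiveness.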

Performance Metrics

System Specifications

- Inference Latency: <50ms per action (end-to-end response at the Raspberry Pi 5; model inference itself runs on the laptop GPU)

- Training Efficiency: Good performance with 10-20 episodes

- Model Size: ~45MB ACT policy checkpoint

- Control Range: normalized steering in [-1.0, 1.0], throttle in [0.0, 1.0]

- Network Load: ~33KB per frame at 30fps (about 1 MB/s, or roughly 8 Mbit/s)
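The bandwidth figure follows directly from the frame size and rate. Assuming 1 KB = 1000 bytes and 8-bit RGB pixels, the comparison with a raw 640x480 frame suggests roughly 28:1 compression before transmission (for example JPEG), although the source does not name the encoding:

```latex
\begin{aligned}
33\,\text{KB/frame} \times 30\,\text{frames/s} &= 990\,\text{KB/s} \approx 7.9\,\text{Mbit/s} \\
640 \times 480 \times 3\,\text{B} &= 921{,}600\,\text{B} \approx 922\,\text{KB per raw frame} \\
922\,\text{KB} \,/\, 33\,\text{KB} &\approx 28
\end{aligned}
```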

Training Results

- Dataset: 76 episodes, 26,064 synchronized samples

- Model Architecture: SimpleACTModel (23.6M parameters)

- Training Duration: ~25 epochs, logged with TensorBoard

- Training Hardware: NVIDIA RTX 4060 Laptop GPU

- Validation: Real-time performance testing in controlled environments
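To put the dataset size in perspective, assume samples were recorded at the camera's 30 fps (the source does not state the sampling rate explicitly):

```latex
\begin{aligned}
\frac{26{,}064\ \text{samples}}{76\ \text{episodes}} &\approx 343\ \text{samples/episode} \\
\frac{343\ \text{samples}}{30\ \text{samples/s}} &\approx 11.4\ \text{s per episode} \\
\frac{26{,}064\ \text{samples}}{30\ \text{samples/s}} &\approx 869\ \text{s} \approx 14.5\ \text{min of driving}
\end{aligned}
```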

Key Features

Complete Pipeline

End-to-end system from demonstration recording to autonomous deployment, with a single command interface for the record → train → deploy workflow.
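A single entry point with one subcommand per stage is a common way to build such an interface. The sketch below uses the standard `argparse` subcommand mechanism; the program name, subcommands, and flags are all hypothetical, and only the 25-epoch default echoes a figure from this document.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical command and flag names; the project's real CLI may differ.
    parser = argparse.ArgumentParser(prog="quantumtracer")
    sub = parser.add_subparsers(dest="command", required=True)

    rec = sub.add_parser("record", help="record teleoperated driving episodes")
    rec.add_argument("--episodes", type=int, default=10)
    rec.add_argument("--out", default="data/")

    tr = sub.add_parser("train", help="train an ACT policy on recorded episodes")
    tr.add_argument("--dataset", default="data/")
    tr.add_argument("--epochs", type=int, default=25)

    dep = sub.add_parser("deploy", help="drive autonomously with a trained policy")
    dep.add_argument("--checkpoint", required=True)
    return parser


def main(argv=None) -> None:
    args = build_parser().parse_args(argv)
    # Each stage would dispatch to its own module; here we only echo the choice.
    print(f"running stage: {args.command}")
```

Usage would look like `quantumtracer train --epochs 25`; `required=True` on the subparsers makes a missing stage an error instead of a silent no-op.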

Safety Systems

Multiple safety layers including emergency stop capabilities, confidence-based speed control, network error handling, and hardware PWM limits.
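One way to combine these layers is a single filter applied to every command before it reaches the PWM outputs. This is a hypothetical sketch: the thresholds, the linear confidence slow-down, and the "stop on stale data" rule are assumptions, and the hardware PWM limits would still sit below it.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class SafetyConfig:
    # All thresholds are hypothetical placeholders, not values from the project.
    command_timeout_s: float = 0.2  # treat the link as lost after this long without a fresh action
    min_confidence: float = 0.5     # below this, throttle is scaled down linearly
    max_throttle: float = 0.6       # software cap in addition to hardware PWM limits


def safe_command(steering: float, throttle: float, confidence: float,
                 last_action_age_s: float, estop: bool,
                 cfg: Optional[SafetyConfig] = None) -> Tuple[float, float]:
    """Apply the safety layers in order and return (steering, throttle)."""
    cfg = cfg or SafetyConfig()
    # Emergency stop and stale network data (error handling) both force a stop.
    if estop or last_action_age_s > cfg.command_timeout_s:
        return 0.0, 0.0
    # Clamp to the normalized control range and the throttle cap.
    steering = max(-1.0, min(1.0, steering))
    throttle = max(0.0, min(cfg.max_throttle, throttle))
    # Confidence-based speed control: slow down when the policy is unsure.
    confidence = max(0.0, min(1.0, confidence))
    if confidence < cfg.min_confidence:
        throttle *= confidence / cfg.min_confidence
    return steering, throttle
```

Ordering matters here: the stop conditions are checked first so that no later layer can re-enable motion.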

Real-time Inference

Inference runs with GPU acceleration on the laptop while the Raspberry Pi handles lightweight edge processing, giving sub-50ms response times.

Open Source Integration

Built as an extension to the LeRobot framework, contributing robotic arm integration and UGV capabilities to the broader robotics community.

Deployment Architecture

Phase 1: Data Collection

Record human driving demonstrations from RC controller input while simultaneously capturing front camera frames and the corresponding control signals.
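Camera frames and PWM readings arrive on separate clocks, so each frame has to be paired with a control sample to form a synchronized training sample. A minimal sketch, assuming both streams carry timestamps from the same clock: nearest-timestamp matching with `bisect`, dropping frames whose closest control sample is more than a hypothetical 20 ms away. The function names and tolerance are illustrative.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def nearest_index(times: Sequence[float], t: float) -> int:
    """Index of the element of sorted, non-empty `times` closest to t (ties go earlier)."""
    i = bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    return i if times[i] - t < t - times[i - 1] else i - 1


def synchronize(frame_times: Sequence[float], control_times: Sequence[float],
                controls: Sequence[T], max_skew_s: float = 0.02) -> List[Tuple[int, T]]:
    """Pair each frame index with its nearest control sample within max_skew_s."""
    if not control_times:
        return []
    samples = []
    for f_idx, t in enumerate(frame_times):
        c_idx = nearest_index(control_times, t)
        if abs(control_times[c_idx] - t) <= max_skew_s:
            samples.append((f_idx, controls[c_idx]))
    return samples
```

Dropping badly aligned pairs, rather than keeping them, avoids teaching the policy a control value that belongs to a different moment.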

Phase 2: Training

Train the ACT policy on the laptop with GPU acceleration, using TensorBoard to monitor convergence and validation performance.

Phase 3: Autonomous Operation

Deploy the trained model with distributed inference: the Raspberry Pi handles sensor input and motor control while the laptop provides AI predictions via WiFi.
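On the Pi side, each control cycle captures a frame, requests an action from the laptop within a time budget, and actuates. The sketch below is a hypothetical structure: `capture`, `request_action`, and `actuate` are injected callables standing in for the camera, network, and PWM code; the 50 ms budget mirrors the stated response time; and falling back to straight wheels and zero throttle on a missed deadline is an assumed policy.

```python
import time
from typing import Callable, Optional, Tuple

Action = Tuple[float, float]  # (steering, throttle)


def control_step(capture: Callable[[], bytes],
                 request_action: Callable[[bytes, float], Optional[Action]],
                 actuate: Callable[[float, float], None],
                 budget_s: float = 0.05) -> Tuple[Action, float]:
    """Run one Pi-side cycle and return (action applied, elapsed seconds).

    request_action receives the frame and the remaining time budget and is
    expected to return None if the laptop does not answer in time.
    """
    start = time.perf_counter()
    frame = capture()
    remaining = budget_s - (time.perf_counter() - start)
    action = request_action(frame, remaining) if remaining > 0 else None
    if action is None:
        action = (0.0, 0.0)  # fail safe: straight wheels, zero throttle
    actuate(*action)
    return action, time.perf_counter() - start
```

Injecting the three callables keeps the timing and fallback logic testable on a laptop without a camera, network, or GPIO.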