May 2025

A Thousand Worlds and Species: Adding Human Life to World Models

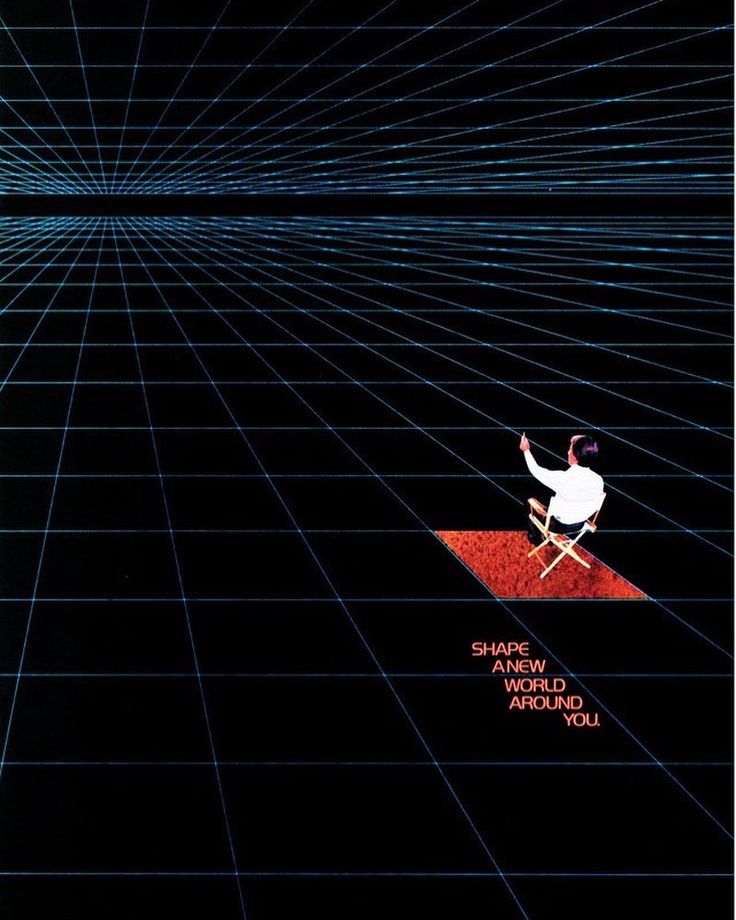

For decades, a holy grail of computer science has been the Turing Test: the idea that a machine's conversation could be indistinguishable from a human's. AI language models now seem to have reached that point, and we often shrug off each breakthrough as "just another Tuesday." The physical world presents a far greater challenge: the Physical Turing Test, in which you can't tell whether a physical task, like cleaning up a messy room or preparing a meal, was done by a human or a machine.

Achieving this level of physical dexterity and common sense in robots is incredibly difficult. One major hurdle lies in the data needed to train them. While language models feast on the internet's vast corpus of text, roboticists face a far more constrained reality. The question becomes: how do we fuel robotic learning at scale?

And beyond even this challenge lies what we might call the Physical Social Turing Test – where a humanoid robot's physical social interactions become indistinguishable from those of a human. This ultimate test would require not just mastery of tasks, but of the subtle physical language of human interaction itself.

The Problem with Human Fuel

Language model researchers might feel they are running out of internet data, calling it the "fossil fuel of AI". However, roboticists face an even starker reality. We don't even have this kind of fossil fuel readily available. Data for robot training, such as the continuous joint control signals needed to direct a robot's movements over time, cannot be scraped from the internet. You won't find this information on Wikipedia, YouTube, or Reddit. This data must be collected directly.

A common method for collecting this data is teleoperation, where a human, perhaps wearing a VR headset, remotely controls a robot to teach it tasks. While sophisticated, this process has been described as "very slow and painful" and one that "doesn't scale at all". Real-robot data collected this way has been called "human fuel," which is considered "worse than the fossil fuel" because every sample depends on direct human effort.

Furthermore, data collection is limited to at most 24 hours per robot per day, and in practice, much less, as both humans and robots tire. This reliance on human-generated data is a significant bottleneck that prevents robots from achieving the scale needed for true general-purpose capabilities.

Simulation: The Nuclear Energy for Robotics

To overcome this limitation and find the "nuclear energy for robotics," we must enter simulation and train outside the physical world.

Simulation provides two critical advantages that enable learning at a scale impossible with human data alone:

- Speed: Simulation can run up to 10,000 times faster than real time, because a single GPU can simulate 10,000 physics environments in parallel. This dramatically accelerates training. For example, humanoid robots have received the equivalent of 10 years' worth of walking practice in just two hours of simulation time.

- Diversity (Domain Randomization): Running 10,000 identical environments isn't sufficient. To create robust policies, you must vary parameters like gravity, friction, and weight across these simulations. This technique is known as domain randomization.
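Domain randomization can be sketched in a few lines. The example below is a minimal illustration using only the Python standard library, not the API of any particular simulator. The `PhysicsParams` class, the `RANGES` table, and the specific numeric ranges are assumptions made for this example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicsParams:
    gravity: float     # m/s^2, downward magnitude
    friction: float    # dimensionless friction coefficient
    mass_scale: float  # multiplier on the robot's nominal masses


# Hypothetical randomization ranges, chosen only for illustration.
RANGES = {
    "gravity": (8.8, 10.8),
    "friction": (0.3, 1.2),
    "mass_scale": (0.8, 1.2),
}


def sample_params(rng: random.Random) -> PhysicsParams:
    """Draw one environment's physics parameters uniformly from RANGES."""
    return PhysicsParams(**{k: rng.uniform(lo, hi) for k, (lo, hi) in RANGES.items()})


def make_randomized_envs(num_envs: int, seed: int = 0) -> list[PhysicsParams]:
    """Create one parameter set per environment, reproducibly from a seed."""
    rng = random.Random(seed)
    return [sample_params(rng) for _ in range(num_envs)]


# 10,000 environments, each with its own gravity, friction, and mass.
envs = make_randomized_envs(10_000)
```

Uniform sampling is the simplest choice here. Other distributions, or ranges that widen as training progresses, would fit the same structure.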

The power of simulation lies in this principle: if a neural network can learn to control a robot and solve tasks in a million different simulated worlds, it becomes highly likely that it will also be able to solve the million-and-first world, which is our physical reality. The goal is to ensure that our physical world is squarely within the distribution of the diverse training environments created in simulation.
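One crude way to check that the physical world sits inside the training distribution is to compare measured real-world parameters against the ranges used in simulation. The sketch below assumes per-parameter ranges and made-up measurements; the names `covers`, `training_ranges`, and `real_world` are hypothetical. Range coverage is a necessary condition rather than a guarantee of transfer.

```python
def covers(ranges: dict[str, tuple[float, float]], measured: dict[str, float]) -> dict[str, bool]:
    """Report, per parameter, whether the measured value lies inside the training range."""
    return {name: lo <= measured[name] <= hi for name, (lo, hi) in ranges.items()}


# Hypothetical ranges used for randomization during training.
training_ranges = {
    "gravity": (8.8, 10.8),
    "friction": (0.3, 1.2),
    "mass_scale": (0.8, 1.2),
}

# Hypothetical measurements taken on the real robot and floor.
real_world = {"gravity": 9.81, "friction": 0.25, "mass_scale": 1.05}

report = covers(training_ranges, real_world)
out_of_range = [name for name, ok in report.items() if not ok]
print(out_of_range)  # ['friction']
```

In this made-up case the real floor is more slippery than anything seen in training, which suggests widening the friction range before deployment.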

This allows for policies trained entirely in simulation to be applied directly to real robots without needing any fine-tuning – known as zero-shot transfer. This has been demonstrated for complex tasks like dexterous manipulation, robot dog balancing, and even sophisticated whole-body control for humanoids using surprisingly compact neural networks (1.5 million parameters) capable of "systemwide reasoning".

Generating Simulations: Creating New Worlds for Learning

Early simulation efforts (Simulation 1.0) often involved building a digital twin – a meticulous, one-to-one copy of the robot and its environment. While effective for zero-shot transfer, building these digital twins is described as "very tedious and manual," requiring significant human effort to model robots, environments, and everything else.

To truly break free from human limitations and scale diversity, the next step is to generate parts, or even all, of the simulation.

This leads to generative simulation, creating worlds for robots to learn in that go far beyond handcrafted digital twins.

Building Simulation Systems: Practical Approaches

Moving from concept to implementation, how are these simulation systems actually built?

- Parallelization: Modern simulation frameworks must be capable of running thousands of environments simultaneously. A single GPU can simulate 10,000 environments in parallel, enabling the massive scale needed for effective reinforcement learning.

- Domain Randomization: Beyond just running multiple simulations, you need to systematically vary the parameters across these environments. This includes physical properties (gravity, friction, mass), visual properties (textures, lighting), and task variations (object placements, goals). This randomization is what ensures robustness and real-world transfer.

- Reinforcement Learning Integration: These simulation environments must interface cleanly with reinforcement learning frameworks, allowing policies to be trained at scale. The goal is to train neural networks that can perform "systemwide reasoning" for whole-body control, even with relatively compact architectures (1.5 million parameters).
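The three ingredients above can be combined in a toy pipeline. The sketch below is deliberately small and makes several assumptions: the "robot" is a 1-D point mass, the policy is a two-parameter PD controller, and training uses random-search hill climbing as a stand-in for a real reinforcement learning algorithm. All names and constants are hypothetical, and a production system would batch the rollouts on a GPU instead of looping in Python.

```python
import random

DT, STEPS, TARGET = 0.02, 150, 1.0


def make_envs(num_envs, rng):
    """Domain randomization: each environment gets its own (mass, friction)."""
    return [(rng.uniform(0.5, 2.0), rng.uniform(0.1, 1.0)) for _ in range(num_envs)]


def rollout_cost(policy, env):
    """Simulate one episode and return the final distance to the target."""
    kp, kd = policy
    mass, friction = env
    x, v = 0.0, 0.0
    for _ in range(STEPS):
        force = kp * (TARGET - x) - kd * v     # policy: PD controller
        accel = (force - friction * v) / mass  # toy physics with viscous friction
        v += accel * DT
        x += v * DT
    return abs(TARGET - x)


def batch_cost(policy, envs):
    """Average cost over all environments (a GPU simulator would run these in parallel)."""
    return sum(rollout_cost(policy, env) for env in envs) / len(envs)


def train(envs, iterations=40, seed=0):
    """Random-search hill climbing: keep a perturbed policy only if it scores better."""
    rng = random.Random(seed)
    policy = (1.0, 0.5)
    best = batch_cost(policy, envs)
    for _ in range(iterations):
        candidate = (policy[0] + rng.gauss(0, 0.5), policy[1] + rng.gauss(0, 0.5))
        cost = batch_cost(candidate, envs)
        if cost < best:
            policy, best = candidate, cost
    return policy, best


envs = make_envs(32, random.Random(42))
initial_cost = batch_cost((1.0, 0.5), envs)
policy, final_cost = train(envs)
```

Because every candidate is scored on the whole batch of randomized environments, the search favors gains that work across masses and friction values, not ones tuned to a single world.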

The most promising approach for the future appears to be a hybrid that combines the computational efficiency of classical physics simulation with the diversity and flexibility of neural world models, creating a simulation ecosystem that truly breaks free from the limitations of human-generated data.

The Human Element: The Next Frontier of Simulation

We have made tremendous progress in simulating physical environments where robots learn about objects, physics, and tasks, and the simulation techniques described above are bringing that side of the problem closer to a solution. Yet most of these simulations are missing a critical element: humans. The simulated worlds exist in a peculiar vacuum, without the very beings that create and use the robots.

This presents the next major frontier in robotics: understanding and interacting with humans and other living beings in the physical world. While AI systems have become remarkably adept at understanding our thoughts through language and internet data, robots will need to learn how to physically interact with humans through experience—to read our body language, anticipate our movements, collaborate on tasks, and navigate social spaces safely.

The Simulation Challenge: Beyond Empty Worlds

How do we model life and human behavior in simulation? This presents unique challenges that go beyond domain randomization of physical properties:

- Biological Diversity: Living beings demonstrate incredible variation in how they move, communicate, and respond. Unlike physics, which follows consistent laws, biological behavior is influenced by species, instincts, emotions, intentions, and countless other factors that vary across the "thousand species +1" that includes humans.

- Ethical Considerations: Creating simulations involving human-robot interactions raises important questions about consent, privacy, and accurate representation. What data should we use to model human and animal behaviors, and how do we ensure it represents the full spectrum of life?

- Embodied Intelligence: Robots need to understand not just language and images, but also the subtle physical cues that govern interaction with living beings—proximity comfort, gesture meaning, and appropriate physical responses across different species and contexts.

Approaches to Life-Inclusive Simulation

Several promising approaches could help robots go beyond "worlds without animal life" to understand the "thousand species +1" that includes humans:

- Life Behavior Models: Just as we've developed physics engines for simulation, we need sophisticated biological behavior engines that can generate realistic, diverse responses from humans, animals, and even plants. These could build on existing research in computational models of behavior, enhanced with large-scale biological and ethological data.

- Multi-Agent Ecological Simulation: Creating environments where multiple agents (both AI and simulated living beings) interact could generate emergent ecological and social behaviors. This approach would allow robots to learn interaction patterns through repeated experience with diverse life forms.

- Hybrid Reality Training: Combining simulation with real-world interaction in controlled environments could offer a middle ground. Techniques like augmented reality could allow robots to interact with real humans and animals while still maintaining the safety and scalability advantages of simulation.

- Learning from Natural World Media: The vast corpus of nature documentaries, human films, and videos capturing real-world interactions could serve as a training ground for robots to understand the dynamics of the living world, much as language models have learned from text.

The most promising systems will likely combine these approaches, creating what we might call Life Randomization—ensuring robots encounter such a diverse set of living beings, behaviors, and ecological scenarios in simulation that they can handle any real-world interaction they might encounter. Unlike traditional domain randomization that focuses on physical properties, Life Randomization would introduce the incredible diversity of biological responses, adaptations, and social dynamics that characterize our living world.
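By analogy with domain randomization, Life Randomization could be sketched as sampling behavioral parameters for each simulated person in a scene. This is speculative: the `HumanProfile` fields, the `INTENTS` list, and every numeric range below are assumptions made for the example, not measured human data or an existing library.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class HumanProfile:
    walking_speed: float      # m/s
    personal_distance: float  # m, preferred clearance from the robot
    reaction_time: float      # s, delay before responding to the robot
    intent: str               # coarse behavior mode for the episode


# Hypothetical behavior modes a simulated person might follow.
INTENTS = ("ignore_robot", "hand_over_object", "cross_path", "follow_robot")


def sample_human(rng: random.Random) -> HumanProfile:
    """Draw one simulated person's behavior parameters (illustrative ranges)."""
    return HumanProfile(
        walking_speed=rng.uniform(0.5, 2.0),
        personal_distance=rng.uniform(0.3, 1.5),
        reaction_time=rng.uniform(0.2, 1.0),
        intent=rng.choice(INTENTS),
    )


def sample_scene(rng: random.Random, max_humans: int = 4) -> list[HumanProfile]:
    """Populate a scene with a random number of people, including none at all."""
    return [sample_human(rng) for _ in range(rng.randint(0, max_humans))]


rng = random.Random(7)
scenes = [sample_scene(rng) for _ in range(1000)]
```

A real system would need far richer behavior models than four scalar fields. The point of the sketch is only that living agents become one more randomized axis of the training distribution.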

The Physical Social Turing Test

As we advance in this direction, we can formalize a new benchmark: the Physical Social Turing Test. While the original Physical Turing Test focuses on task performance, this extended test evaluates whether we can distinguish between humanoid robots and actual humans based on their social interactions in the physical world. This isn't just about a robot performing tasks correctly—it's about the quality of presence, the naturalness of movement, the appropriateness of social distance, the subtlety of gestures, and the fluid coordination in shared physical spaces.
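One possible way to score such a benchmark is a discrimination test: judges watch an interaction, guess "human" or "robot," and the robot passes if their accuracy cannot be told apart from coin flipping. The sketch below assumes that protocol and uses an exact two-sided binomial test against chance. The function names and the 0.05 threshold are choices made for this example, not part of any established benchmark.

```python
from math import comb


def binomial_two_sided_p(correct: int, trials: int) -> float:
    """Exact two-sided p-value for the null hypothesis that judges guess at chance (p = 0.5)."""
    # With p = 0.5 the distribution is symmetric, so sum the probability of every
    # outcome at least as far from trials / 2 as the observed count.
    observed_dev = abs(correct - trials / 2)
    tail = sum(
        comb(trials, k)
        for k in range(trials + 1)
        if abs(k - trials / 2) >= observed_dev
    )
    return tail / 2 ** trials


def indistinguishable(correct: int, trials: int, alpha: float = 0.05) -> bool:
    """True if judge accuracy is not significantly different from chance."""
    return binomial_two_sided_p(correct, trials) >= alpha


print(indistinguishable(52, 100))  # True: 52% accuracy is consistent with guessing
print(indistinguishable(90, 100))  # False: judges reliably spot the robot
```

Failing to reject the chance hypothesis is weak evidence with few trials, so a serious version of this test would also need enough judges and interactions to detect small differences.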

Passing this test would require robots to master what cognitive scientists call "embodied cognition": the way humans think through their bodies and communicate through movement. A robot passing the Physical Social Turing Test wouldn't just walk like a human or manipulate objects like a human; it would navigate social spaces with the same unconscious grace that humans do, maintaining appropriate distance in elevators, smoothly passing objects in collaborative tasks, and using micro-expressions and body language that feel natural rather than uncanny.

Conclusion

By moving beyond the constraints of human-generated data and embracing the power of generative simulation, robotics can unlock the scale and diversity needed to build capable, versatile physical AI systems that can navigate and interact with our complex world.

The path from today's limited robotics capabilities to a world where robots pass both the Physical Turing Test and the more nuanced Physical Social Turing Test requires a fundamental shift in how we approach robot learning. Simulation provides the "nuclear energy" that powers this transformation, offering both the scale and diversity needed to build truly general-purpose robots.

Life Randomization represents the next evolution in this journey: moving from simulating objects and physics to simulating the rich complexity of living beings and their social dynamics. When humanoid robots can engage with us in ways that are indistinguishable from human interaction, we will have truly bridged the gap between the digital and physical worlds.

Just as language models have revolutionized our digital interactions, simulation-trained robots stand poised to transform our physical world. When that happens—when a robot's actions and social behaviors become indistinguishable from a human's—perhaps we'll greet that milestone too as "just another Tuesday." But the journey there, built on the foundation of generative simulation and Life Randomization, will represent one of the most profound technological transformations in human history.